JuMi is a desktop artificial intelligence assistant that lets users deploy and run a range of Large Language Models (LLMs) entirely offline. By integrating open-source inference frameworks, JuMi brings cutting-edge AI capabilities directly to your device with no cloud dependency, so your data is never sent to a third-party server and you keep full control over your assistant. Whether for office productivity, creative inspiration, or technical exploration, JuMi provides a seamless local AI experience while protecting your privacy.

Core features

Privacy-first design

100% offline operation: All model inference runs locally, and conversation records never leave your device.

Zero data upload: No data is sent to cloud services, avoiding their privacy risks and meeting the needs of sensitive fields such as finance and healthcare.

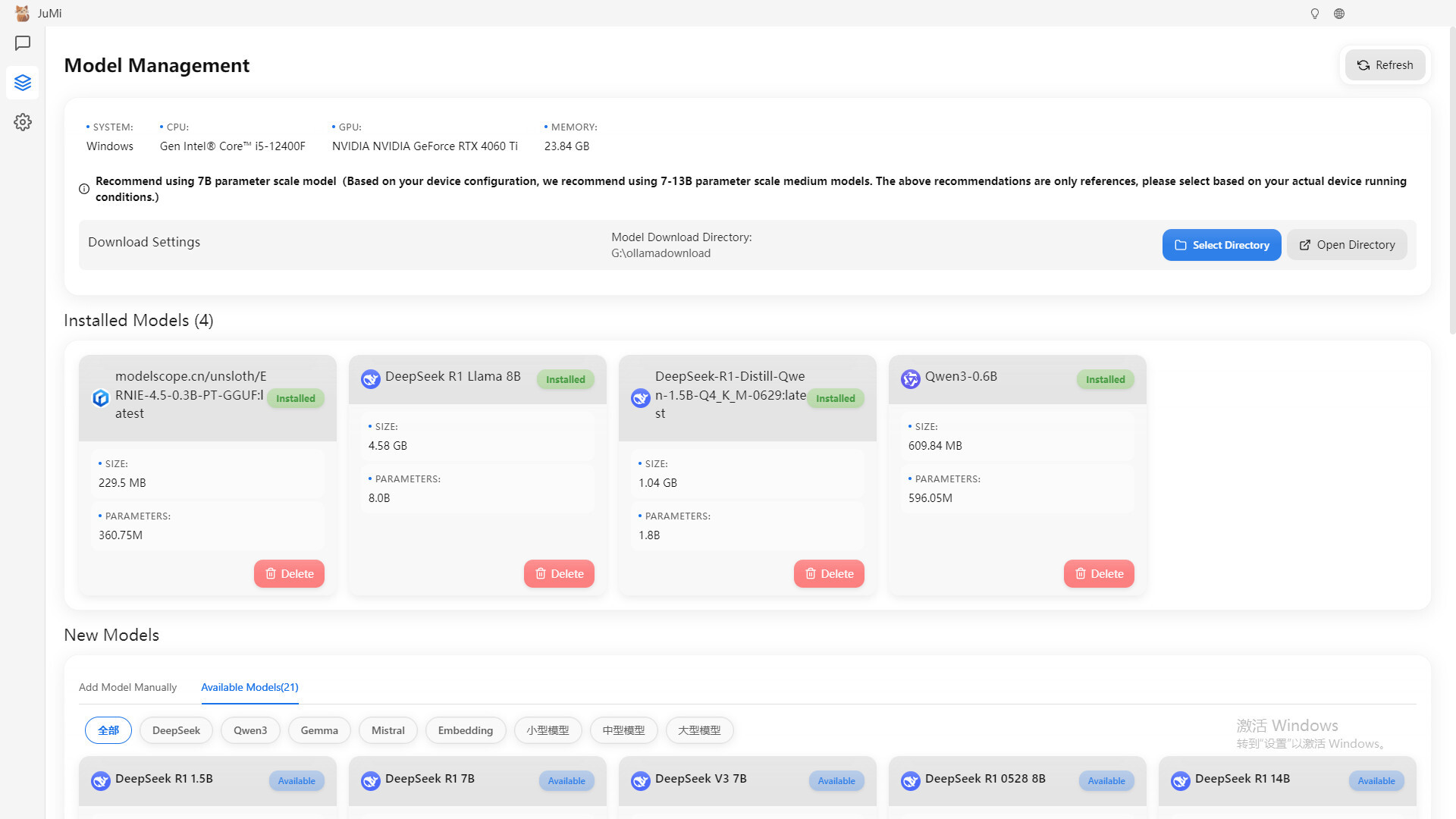

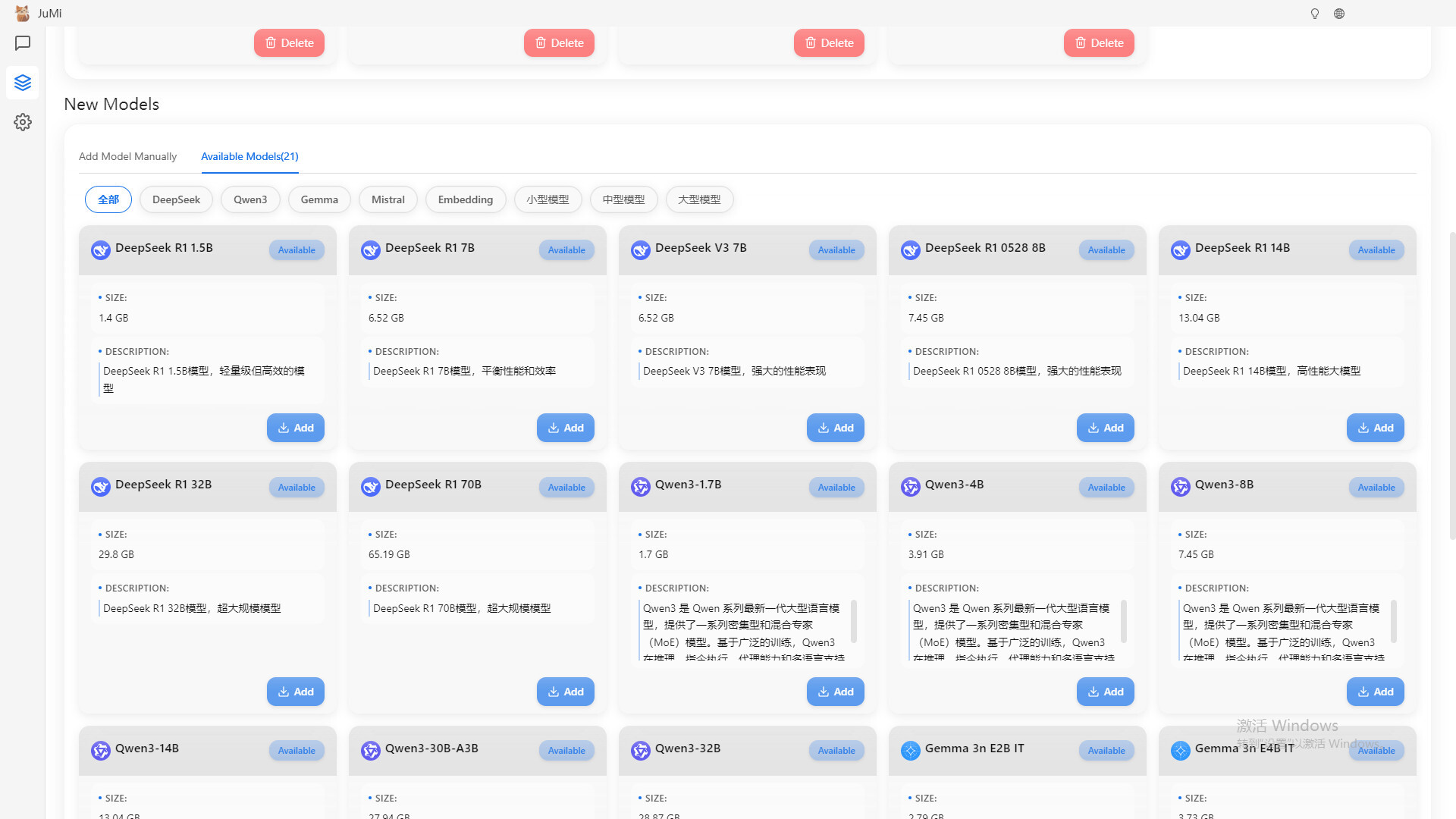

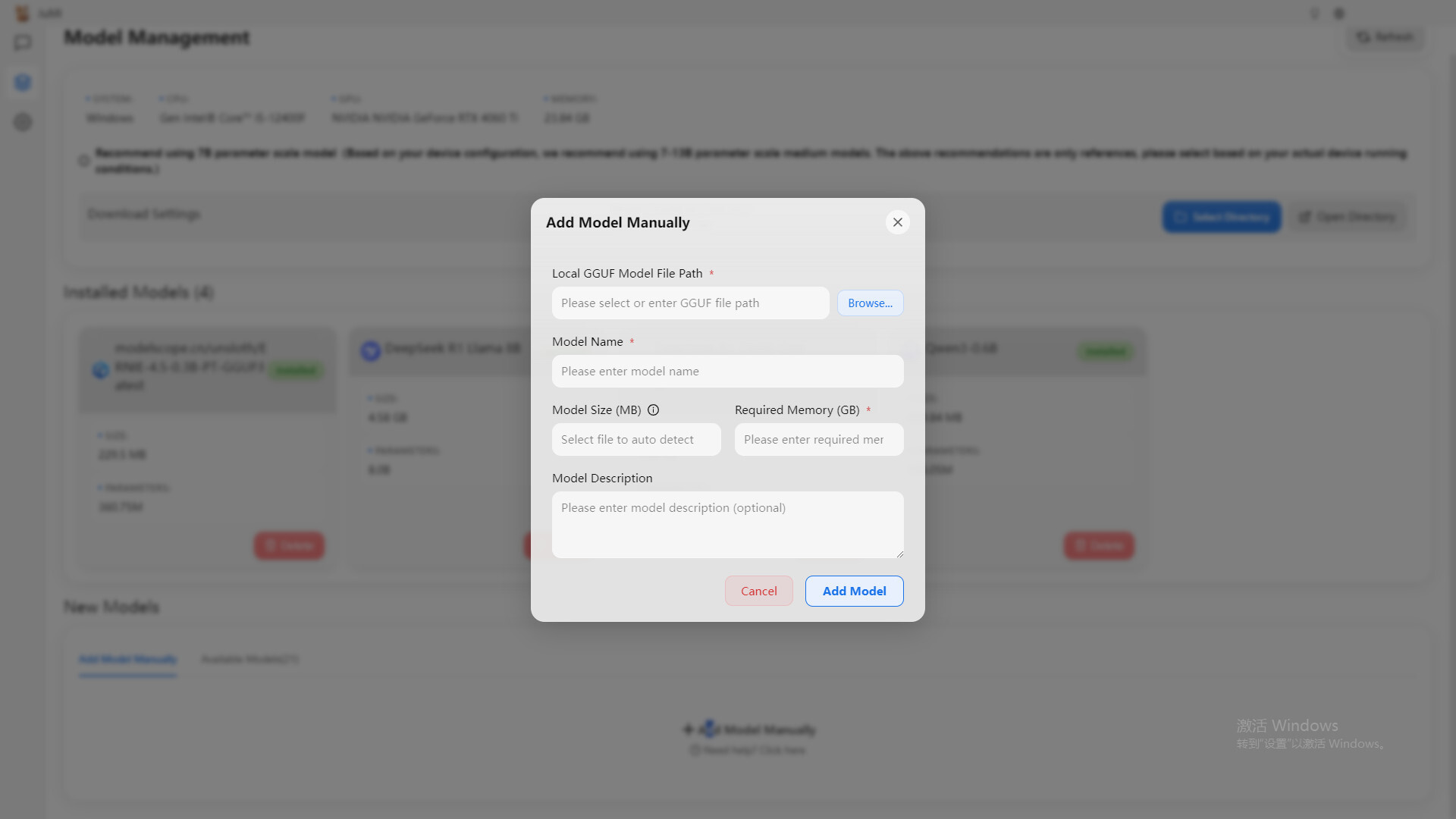

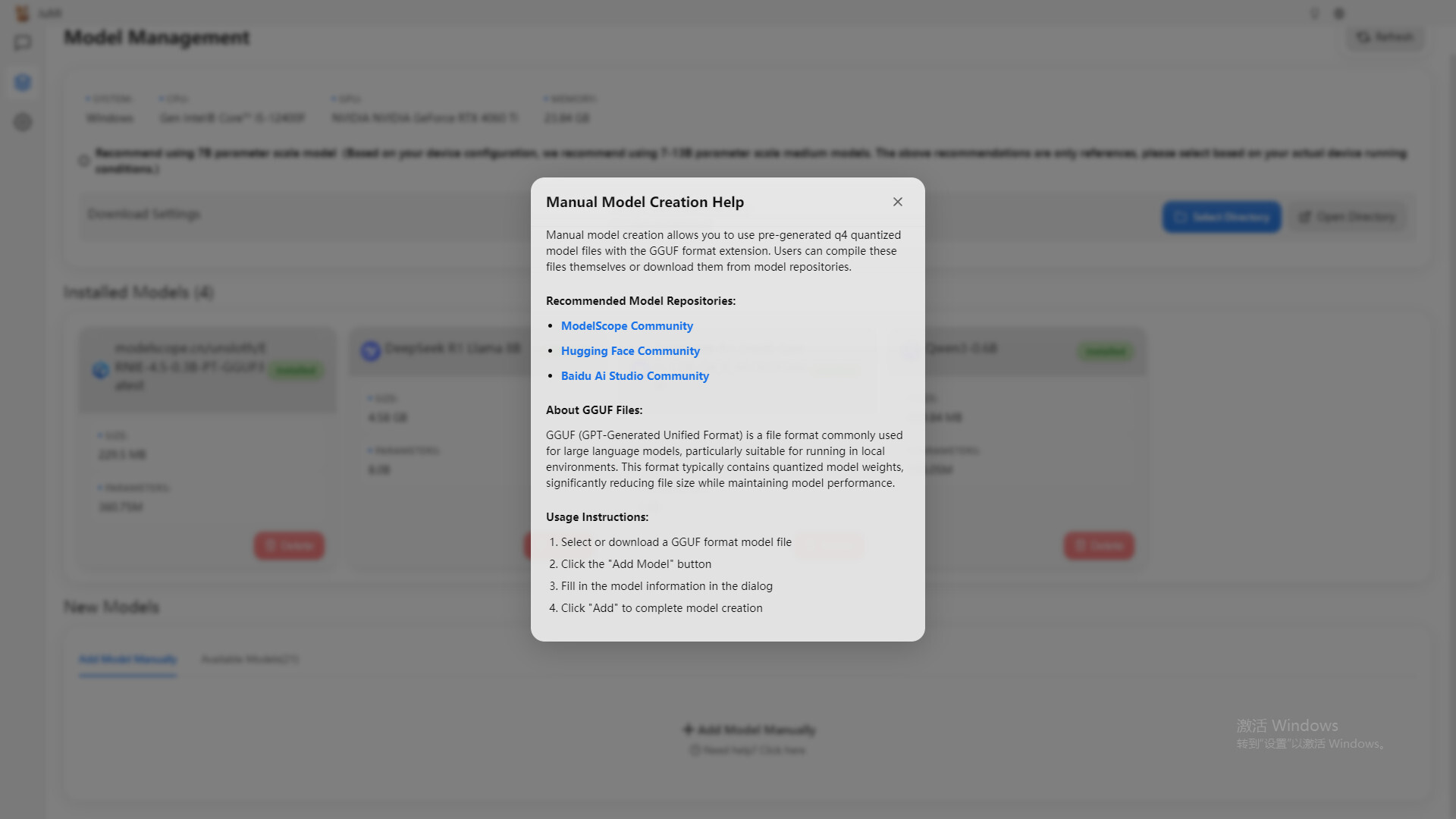

One-click large model management

Extensive model library: A built-in selection of open-source models (7B to 70B parameters) covering multilingual dialogue, code generation, academic research, and more.

Minimal-effort deployment: A graphical interface automatically handles model downloads, dependency installation, and version updates, so no command-line work is needed.

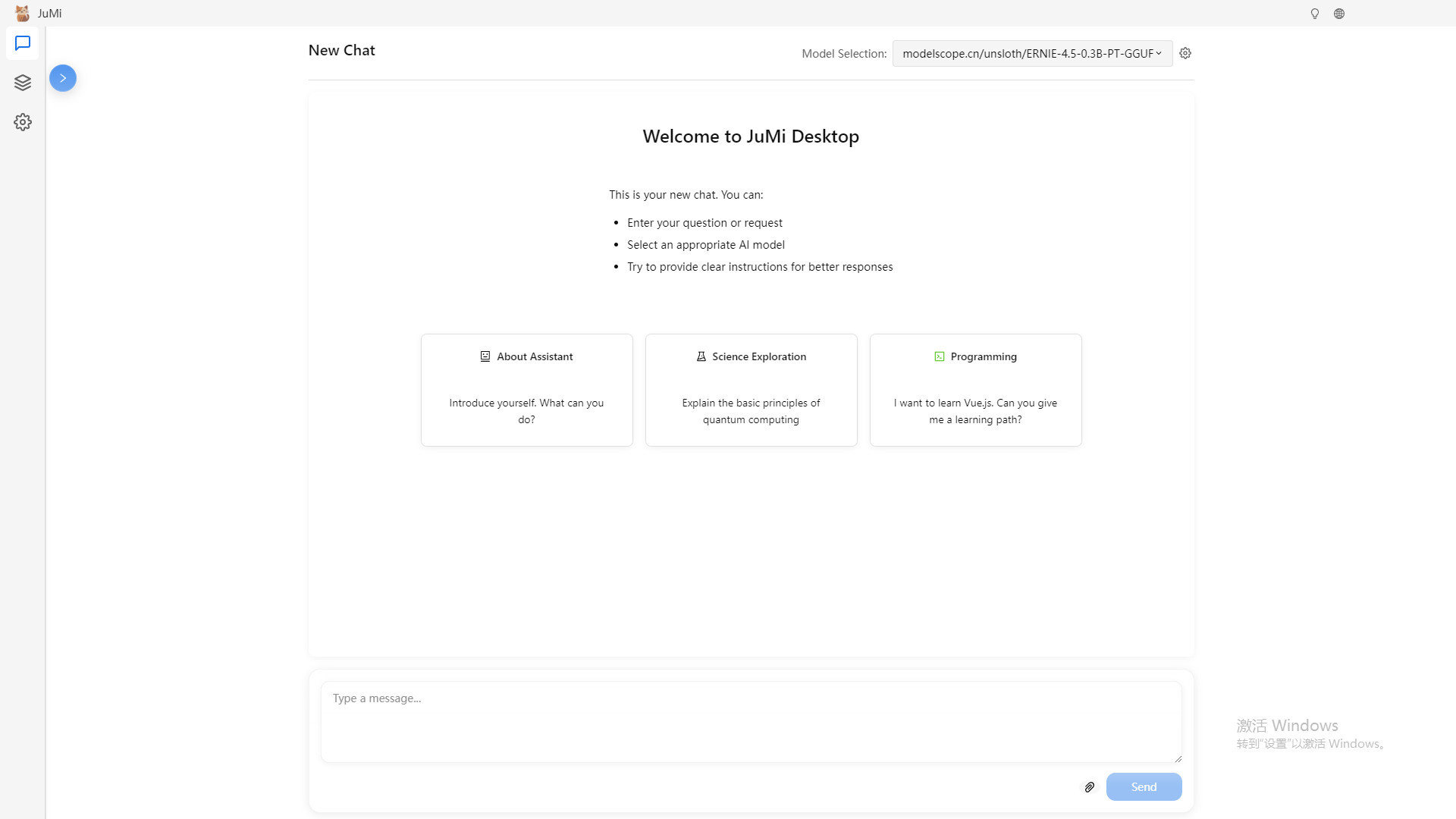

Intelligent interactive experience

ChatGPT-like dialogue: Supports multi-turn conversations with context, file content parsing (PDF, TXT, and code files), and instruction following.

Custom role settings: Create dedicated AI personas tailored to specific scenarios such as educational tutoring and creative writing.

⚙️ Open ecosystem support

Broad hardware compatibility: Optimized CPU/GPU resource scheduling, with support for NVIDIA CUDA, AMD ROCm, and CPU-only mode.

Developer-friendly: Provides an API and a plugin system that can be extended to local knowledge-base retrieval and integration with automation scripts.